8 Questions to Ask When Evaluating the Impact of Student Success Programs

Most academic affairs leaders can readily name dozens of student retention and support initiatives in place on their campus. But which of these initiatives (many of them expensive to maintain) are truly effective? In an environment where nearly everything affects a student’s likelihood to persist, how can we know which particular program or intervention made a meaningful impact on any given individual’s outcomes?

For a number of reasons, this question has been difficult for many of our members to answer:

Methodology: Many factors are known to correlate with student retention and completion (including advisor meetings, involvement in student organizations, and even use of the campus gym), but without randomized controlled trials it is hard to draw a direct causal line between any one activity and a binary student outcome such as persisting at the institution.

Perception: Many view student success programs as intrinsically valuable, which makes rigorous evaluation politically unpalatable and strategic disinvestment even more so. Given the good intentions behind these programs, asking for hard evidence of impact, let alone a financial return, can seem callous.

Capacity: Institutional research offices are often understaffed, and existing staff are pulled in many different directions. This leaves little room for dedicated or complex analyses of multiple initiatives.

Embedding evaluation into program planning

To foster a culture of continuous improvement, Purdue University’s Office of Institutional Research, Assessment, and Effectiveness (OIRAE) employs an assessment specialist housed in the Office of Student Success. This individual is responsible for coordinating an annual program review process for all central student support units.

Each July, the assessment specialist meets with program directors to review the program evaluation template in detail and recommend changes based on institutional priorities and prior-year performance. Between August and October, program staff and the assessment specialist formulate and revise program evaluations through an iterative process. Program directors must answer the following eight questions in detail:

1. What are the program’s short-term goals? Program goals are designed to be specific, operational, and measurable in order to guide programming. Instead of writing a long-term vision statement, staff must create between four and six concrete, short-term steps that are designed to achieve the program’s mission.

2. What are the desired student learning outcomes? Program staff detail measurable student learning outcomes for their programs. The template emphasizes that learning outcomes should aim to provide equitable engagement opportunities to students across all offerings and sections.

3. How will the program be assessed? All programs must maintain detailed assessment plans for evaluating each learning outcome. Assessment methods include student surveys, demographic analyses, feedback from student leaders, and program website traffic.

4. How will progress be tracked? Program staff outline specific data points, charts, graphs, and qualitative information sources to demonstrate their progress towards improving outcomes. In this section, staff compare program outcomes against control groups to reveal performance gaps.

5. What changes do staff recommend based on the data? Programs describe notable changes to program design, staff and budgetary allocations, marketing strategies, learning outcomes, and assessment methods from the previous year. Staff also propose improvements to address areas of underperformance in the previous year.

6. How will the program impact student success? Perhaps the most important question: staff must describe how the program aims to positively impact student retention, progression, engagement, and completion. The assessment template asks program staff to evaluate performance on the Purdue-Gallup Index, a measure of student engagement and well-being at the institution.

7. How does the program align with institutional goals? In this section, program staff connect the program's goals and student outcomes to the institution's broader strategic goals.

8. What programs at peer institutions will serve as benchmarks? Program staff select at least one student success program at a peer institution to benchmark against. In the template, staff explain why they chose those programs and which characteristics or practices they hope to replicate to achieve similar positive outcomes.

Highest-impact practices pitched to units for replication

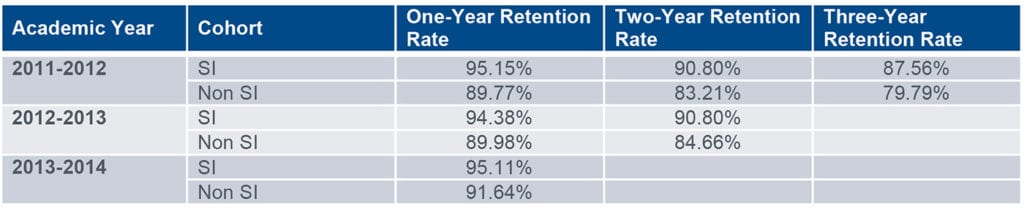

While Purdue does not use program evaluations as a blunt instrument for deciding whether to invest in or divest from its various student success initiatives, it does encourage the expansion of initiatives that demonstrate the largest impact. This year, student success leaders focused on scaling supplemental instruction (SI) because of its demonstrated impact on student retention. Using card swipe data, the assessment specialist determined utilization and attendance rates for SI sessions by day and time. Based on these analyses, academic units have restructured and expanded their SI sessions to meet student demand.

Figure: Retention rates for attendees and non-attendees in SI sessions
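The two SI analyses described above (demand by day and time, and retention for attendees versus non-attendees) can be sketched roughly as follows. This is a minimal illustration, not Purdue's actual method: it assumes hypothetical inputs, namely a list of (student ID, swipe timestamp) pairs from SI card readers and a cohort roster with a retained-or-not flag, and the names `Student`, `attendance_by_slot`, and `retention_by_attendance` are invented for the example. A gap between attendees and non-attendees is a descriptive comparison; as the methodology caveat earlier notes, it does not by itself show that SI caused the difference.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime

# Fixed English names so output does not depend on the system locale.
WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday")


@dataclass
class Student:
    """One student in the tracked cohort (hypothetical schema)."""
    student_id: str
    retained: bool  # True if the student persisted to the next term


def attendance_by_slot(swipes: list[tuple[str, datetime]]) -> Counter:
    """Count SI card swipes per (weekday, hour) slot to show demand by day and time."""
    return Counter((WEEKDAYS[ts.weekday()], ts.hour) for _, ts in swipes)


def retention_by_attendance(
    cohort: list[Student], swipes: list[tuple[str, datetime]]
) -> dict[str, float]:
    """Retention rate for cohort members with at least one SI swipe vs. none.

    Swipes from students outside the cohort are ignored. An empty group
    yields NaN rather than a misleading 0%.
    """
    attendee_ids = {student_id for student_id, _ in swipes}
    groups: dict[str, list[bool]] = {"attendees": [], "non_attendees": []}
    for student in cohort:
        key = "attendees" if student.student_id in attendee_ids else "non_attendees"
        groups[key].append(student.retained)
    return {
        name: sum(flags) / len(flags) if flags else float("nan")
        for name, flags in groups.items()
    }


if __name__ == "__main__":
    # Made-up example data, for illustration only.
    swipes = [
        ("a", datetime(2023, 9, 4, 18, 5)),   # Monday evening
        ("a", datetime(2023, 9, 11, 18, 2)),  # Monday evening
        ("b", datetime(2023, 9, 5, 10, 0)),   # Tuesday morning
    ]
    cohort = [Student("a", True), Student("b", True),
              Student("c", False), Student("d", True)]
    print(attendance_by_slot(swipes).most_common())
    print(retention_by_attendance(cohort, swipes))
```

Counting swipes per (weekday, hour) slot is what lets units see which session times are oversubscribed; a real analysis would also want to control for differences in who chooses to attend.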

Calculating a program’s return on investment (ROI)

Some institutions have used program cost data and associated tuition revenue to determine the ROI of particular programs, drawing on tools such as one from the Delta Cost Project. Detailed cost accounting and retention projections can both give program leaders the information they need to justify additional investment and help the administration prioritize resources across campus as budgets tighten.
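A back-of-the-envelope ROI calculation of this kind might look like the sketch below. It is a simplified model under stated assumptions, not the Delta Cost Project's methodology: it treats the program's benefit as one year of net tuition revenue from students assumed to be retained only because of the program (participants multiplied by an assumed retention lift) and compares that figure with the program's cost. The function name `program_roi` and its parameters are hypothetical, and the estimate is only as credible as the retention lift fed into it.

```python
def program_roi(
    participants: int,
    retention_lift: float,
    net_tuition_per_student: float,
    program_cost: float,
) -> float:
    """Simplified one-year ROI: (retained tuition revenue - cost) / cost.

    retention_lift is an *assumed* difference in retention rate attributable
    to the program (0.05 means five percentage points). Its credibility,
    not the arithmetic, drives the result.
    """
    if program_cost <= 0:
        raise ValueError("program_cost must be positive")
    # Students assumed to be retained only because of the program.
    additional_retained = participants * retention_lift
    retained_revenue = additional_retained * net_tuition_per_student
    return (retained_revenue - program_cost) / program_cost


# Hypothetical inputs: 400 participants, 5-point lift, $10,000 net tuition, $160,000 cost.
print(program_roi(400, 0.05, 10_000, 160_000))  # 0.25
```

Here 20 additional retained students bring in $200,000 against a $160,000 cost, a 25% return. A fuller model would likely count several years of retained tuition and subtract the cost of serving those students.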

Quantitative metrics are only the start of the conversation

Program leaders are understandably wary of evaluations that focus solely on one or two key indicators, be they financial or academic in nature. Rather than using an ROI calculation or retention analysis to benchmark all program investments against one another, most progressive institutions are leveraging these data as the start of an intentional conversation about improving and refining student success efforts on campus. The evaluation process itself provides a rare opportunity for staff to reflect on strengths and weaknesses and articulate specific, measurable outcomes that their work can drive across the institution.
