Early AI Governance Trends at Colleges and Universities

Increasingly, higher ed leaders are embracing AI initiatives across campus, experimenting with new tools, and evaluating the potential of emerging technologies. Although these leaders recognize the importance of AI governance, only some have taken the first steps to establish an AI governance structure.

Our EAB research team interviewed over 30 chief information officers (CIOs) and AI leaders to learn how higher ed leaders are beginning to approach AI governance and how newly established AI committees have achieved early wins.

Establish an AI governance structure

The ideal structure of an AI governance committee depends on how much AI expertise campus champions already have and how eager the campus is to deploy AI tools. Several institutions have opted to build AI governance structures separately from existing IT governance, while acknowledging the inherent relationship between the two.

Typically, leaders in higher ed institutions build AI governance committees with cross-functional members to develop guidelines, align AI principles with institutional goals, and steer AI adoption.

Babson College separates AI working group from traditional IT governance

Babson College created a multi-tiered AI governance system, distinct from its standard IT governance groups, that includes a steering committee within the President’s Council and a working group. The steering committee comprises senior leaders, among them several AI champions such as the vice president of innovation, the chief marketing officer, and the CAO/CFO.

The steering committee addresses how Babson can review and prioritize new AI requests, how academics and administrators can use AI tools securely, and how AI can be embedded into the curriculum to improve the student experience. In partnership with the steering committee, the 15-member AI working group, composed of staff and faculty, vets new AI initiatives and technology and solicits feedback from three subgroups: 1) risk and security, 2) operations, and 3) academics.

Dickinson College plans to integrate AI governance into existing IT governance infrastructure

Dickinson College established a short-term Presidential Working Group to kickstart AI integration. By the end of the academic year, this group is scheduled to fold into Dickinson’s existing IT governance structure, which is well-positioned to vet vendors for security, manage compliance, and support change management. However, the working group will make a final recommendation for or against this plan based on the results of the trial period.

Dickinson’s cross-departmental group of administrative staff, faculty, and students deliberately comprises a mix of AI champions who are excited about the potential of AI and AI skeptics who harbor serious concerns. The working group’s diverse perspectives have helped balance decisions on ethical and responsible AI adoption, supporting a comprehensive approach to AI enablement.

Set the foundation for AI adoption tied to institutional goals

In a recent survey, 81% of college presidents reported that they had yet to publish an AI policy to guide teaching and research. As a result, three in 10 students are unclear on when they are permitted to use GenAI in their coursework and only 23% of faculty and staff at four-year colleges agree that their colleges are prepared for AI-related changes.

Several higher ed leaders we interviewed use AI governance committees to establish AI principles, guidelines, and/or policies. AI principles are high-level foundational values that explain how AI relates to institutional goals.

AI guidelines, by contrast, offer practical, specific recommendations and/or requirements for AI use. Instead of developing separate guidelines, some institutions have opted to update existing acceptable use, data governance, computer network security, and similar policies to include guidance on AI use.

Elon University ties AI principles to institutional goals

Elon University established an AI working group in fall 2023 to promote ethical implementation of AI initiatives and integrate AI into the culture of the institution. This 30-member cross-departmental working group of provost-vetted, self-motivated students, staff, and faculty has five subcommittees that report directly to the president.

This group established six guiding principles for AI integration, intended to serve as a framework as the institution begins to implement AI. Among other commitments, the principles place people rather than technology at the center of the work and emphasize the enhancement of teaching and learning, digital inclusion, information literacy, and responsible use of AI in research and development.

“We advise provosts and the president on priorities and initiatives so AI can be implemented in an ethical way. Our goal is to ensure that as we adopt these technologies, we are putting humans first and using technology as a tool to enhance education, not replace it.”

– Dr. Haya Ajan, Associate Dean of the Love School of Business

Russell Group principles focus on AI literacy

Twenty-four vice-chancellors in the Russell Group endorsed a set of principles to guide AI literacy among students, staff, and faculty. The principles set out expectations for increasing AI literacy, including equipping staff with AI guidelines, adapting teaching and assessment for ethical and equitable AI use, and maintaining academic integrity through collaboration.

York University links AI principles, guidelines, and an assessment for use cases in one resource

York University’s AI Round Table, led by the CIO and the vice-provost of teaching and learning, facilitated the development of AI principles and guidelines aligned with institutional direction. The group opted to develop guidelines rather than policies to provide structure while maintaining flexibility as AI technology evolves.

Their AI guidelines feature a Rapid Triage assessment for permissible AI use cases, as well as detailed assessments for more complex use cases that require support from IT, information security, legal, and privacy teams.

Butler University updates roadmap for incorporating GenAI in curriculum

Butler University’s GenAI committee, which has more than 10 members and was established by the provost’s office, is charged with developing guidelines for the use of GenAI in teaching and learning. The committee created a roadmap for faculty that outlines the initial steps for incorporating GenAI into the curriculum and will review it every six to 12 months.

University of Arizona develops AI syllabus guidelines for faculty

The University of Arizona’s 100-member AI Access and Integrity Working Group developed an AI Syllabus Guideline for faculty. The guide provides language that faculty may include in their syllabi to help students navigate the use of AI in each class.


Surface successful AI use cases and develop solutions

Once an AI governance committee has been formed and has established AI principles, guidelines, and/or policies, its role shifts to helping the institution make the most effective use of AI by regularly surfacing successful use cases and developing solutions to AI concerns.

One concern that has surfaced through AI governance committees is the need for more AI training and education. The demand is widespread: 92% of provosts said faculty or staff had asked for additional training related to developments in generative AI, and 70% of higher ed administrators reported that they want training programs.

Colorado State University surfaces AI use cases through monthly sprints

Colorado State University’s 50-member, two-campus AI task force is conducting three one-month sprints: 1) assessing the current status of AI, 2) evaluating opportunities and problems, and 3) proposing recommendations to jumpstart the integration of AI practices, processes, and technology. After the third sprint, five subcommittees will each propose one to three solutions for AI use in the following areas: teaching and learning, research, productivity, communities of practice, and innovation.

Notably, task force members must check the task force’s communication channels in Microsoft Teams daily and commit to three hours of synchronous work and five hours of asynchronous work per month to make progress toward their proposals.

Pace University leads training to enhance the effectiveness of AI course instruction

Pace University’s AI committee draws on the institution’s 27-year history of AI research to build faculty training on how to teach AI effectively. Specifically, the CIO, in partnership with co-chairs from the computer science department, led the AI committee in revising the required core computer science course to include AI. The committee then developed a six-week series of modules to teach faculty the new material; the modules will be updated every six to 12 months.

In addition to integrating AI into the core curriculum, the AI committee will launch open-forum user groups of faculty, staff, and students in the coming months to test and experiment with AI tools. These user groups will help surface additional use cases, successes, and failures across campus.

More Resources

Compendium of AI Applications in Higher Education

3 Ways Higher Ed IT Leaders Are Socializing AI Use Across Campus

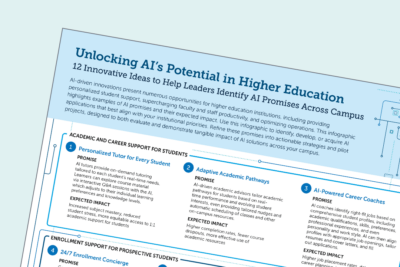

Unlocking AI's potential in higher education

This resource requires EAB partnership access to view.

Access the research report

Learn how you can get access to this resource as well as hands-on support from our experts through IT Strategy Advisory Services.

Learn More