5 takeaways from EAB’s AI-focused Presidential Experience Lab at NVIDIA

In June, nearly 50 presidents joined EAB in Santa Clara for the 2024 Presidential Experience Lab (PEL). This year’s PEL focused on how AI is reshaping the world of work and higher education. It took place at the NVIDIA headquarters and featured speakers like NVIDIA cofounder Chris Malachowsky, Burning Glass Institute’s Matt Sigelman, and Paul LeBlanc from Southern New Hampshire University (SNHU).

The event inspired presidents to think about how AI could transform their institutions, from curriculum to research to operations. For partners that missed it, here are five takeaways from the two-day experience.

1. The arrival of AI in higher education creates a mandate for leaders to act

Unsurprisingly, many PEL speakers believe that AI will transform society. And while experts left room for disagreement, they did not leave room for presidents to ignore the moment. George Siemens, Chief Scientist at SNHU and Human Systems, was most explicit: he said presidents can conclude either that AI is the biggest thing that has ever confronted society or that it will do nothing. But first, they must examine AI closely.

Yet many leaders remain uncertain about AI. A small subset is enthusiastic about its potential, while far more are waiting for a clear signal from industry partners and government that it's time to move on AI. Universities cannot afford to wait passively for others to set the pace of AI deployment. Presidents not only need to understand AI; they must try it themselves so they can engage comfortably in conversations about the right AI strategy for their institution. By taking an active role, universities can ensure that AI advancements align with their values and mission, fostering innovation and responsible use.

-

Next step

Presidents must ask themselves: what changes are possible and appropriate for their students and institution?

2. Universities must both allow AI experimentation and create structures for how AI tools are deployed

Presidents must balance experimentation with structure in how AI is deployed on campus. The University of Florida (UF) offers a strong model for this. Two components allowed UF to drive its initial AI experimentation:

- A strong senior-level advocate in the form of Joe Glover, former provost

- A centralized working group that convened weekly to track progress on AI initiatives; this VP-level group includes the CIO, leaders from Advancement and External Relations, and several deans

This foundation supported a complementary strategy of diffusing AI across the curriculum. UF now offers a three-course AI certificate that any student can complete, and it also allows academic leaders to drive experimentation and development at the college and department level. This freedom enables faculty to do what's right for their discipline and to evolve it over time.

To drive AI experimentation, Paul LeBlanc encouraged presidents to carve out a team to design an academic program unconstrained by how things are done today. Ignore roles, titles, and accreditation standards and consider: what would a truly human-centered offering look like? This exercise may enable faculty to embrace AI-driven academic innovation.

EAB is facilitating a series of AI strategy and tabletop exercises with university leadership teams, cabinets, and AI steering committees to help inspire bolder and more forward-leaning thinking. Learn more about our AI Strategy Workshops.

-

Next step

Embrace a dual approach of creating centralized structures to drive AI strategy and implementation while also allowing for experimentation across campus.

3. It won’t take a million-dollar supercomputer to get started on your AI journey

Because AI has broad applications for every campus stakeholder, presidents were open to championing efforts back on campus to secure a supercomputer. A few even noted that it would make a concrete and potentially appealing fundraising goal. But Malachowsky shared that it took UF nearly three years before the supercomputer was broadly used in research applications. Institutions seeking an immediate impact from generative AI are unlikely to see one simply by purchasing a supercomputer.

Nor is a physical supercomputer the only way to secure computing power. NVIDIA, for instance, sells cloud-based computing capacity, and UF has opened access to its supercomputer to other institutions in the state of Florida and across the Southeastern Conference (SEC). However, a more feasible starting point is to focus on enhancing AI literacy among faculty and staff.

Increasing comfort and familiarity with AI tools is the foundation for integrating AI into the curriculum. Institutions seeking to do so can use EAB's maturity model to tackle early-maturity activities before aiming for higher-maturity ones, such as comprehensive AI curriculum and support.

Goal: Incorporate AI into the curriculum to prepare students for the future of work.

- Tier 1: Basic awareness about public AI tools – Students and faculty have participated in at least one institutionally hosted workshop on how to use public AI tools (e.g., ChatGPT) without compromising sensitive data (e.g., PII, confidential information). They have the critical thinking skills to evaluate the efficacy of AI outputs, including AI inaccuracy and bias.

- Tier 2: AI integrated into coursework – Students regularly use AI tools in coursework within the bounds of instructor guidelines (e.g., as a brainstorming aid or for drafting papers). Faculty create AI-first assignments (e.g., critiquing ChatGPT outputs) where students have opportunities to work with AI tools in a structured way.

- Tier 3: AI curriculum in major disciplines – Students in major disciplines outside computer science take at least one AI-related course in their discipline (e.g., AI in Agriculture and Life Sciences, Business Applications of AI, AI in Public Health). Faculty in major disciplines are trained to create AI-centric courses.

- Tier 4: Required AI expertise for students in every discipline – Every student builds the requisite expertise to apply AI in their fields, including participation in required co-curricular applications of AI. All students have access to the infrastructure and guidance needed to experiment, prototype, and showcase their AI-driven innovations at scale.

Interested in seeing EAB’s full maturity model? Review four tiers of maturity for higher education’s big opportunities to embrace AI.

What does this look like in practice? For faculty, this means offering AI literacy courses and workshops. Georgetown University launched a donor-funded initiative to incentivize faculty to embrace AI tools in their courses, ultimately funding 40 projects in early 2024. And many universities, including Miami University, have hosted symposiums to convene internal and external AI experts.

-

Next step

Don't start with costly infrastructure investments. Instead, consider AI literacy courses, faculty workshops, and even seed funding, all designed to increase faculty comfort with and adoption of AI.

4. AI can serve to reinforce the value of the liberal arts, not threaten it

Some speakers, including Paul LeBlanc and George Siemens, exhorted leaders to embrace the "human" skills that humankind will not lose in an AI "arms race." Matt Sigelman from Burning Glass Institute gave presidents a new lens for the universal vs. technical skills distinction: which timely skills have a shelf life, and which timeless ones will always be in demand? Burning Glass research has found that, on average, 37% of a job's skills change in a five-year period. But identifying timely skills early is not always straightforward. Sigelman used the actuary role to illustrate this.

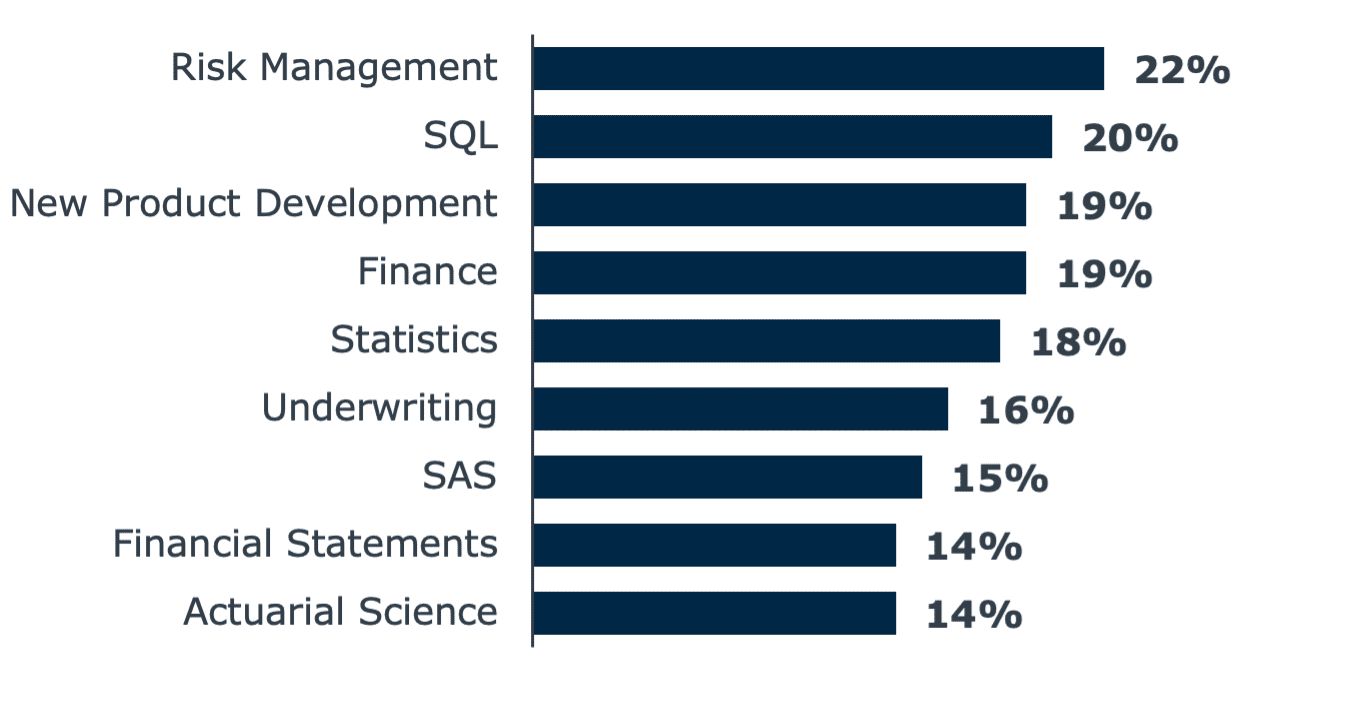

Skills for Actuaries (All)*: percentage of roles requiring skill

Several skills needed by actuaries without machine learning (ML) skills—risk management, finance, statistics, and actuarial science—are no longer even sought from those with ML skills.

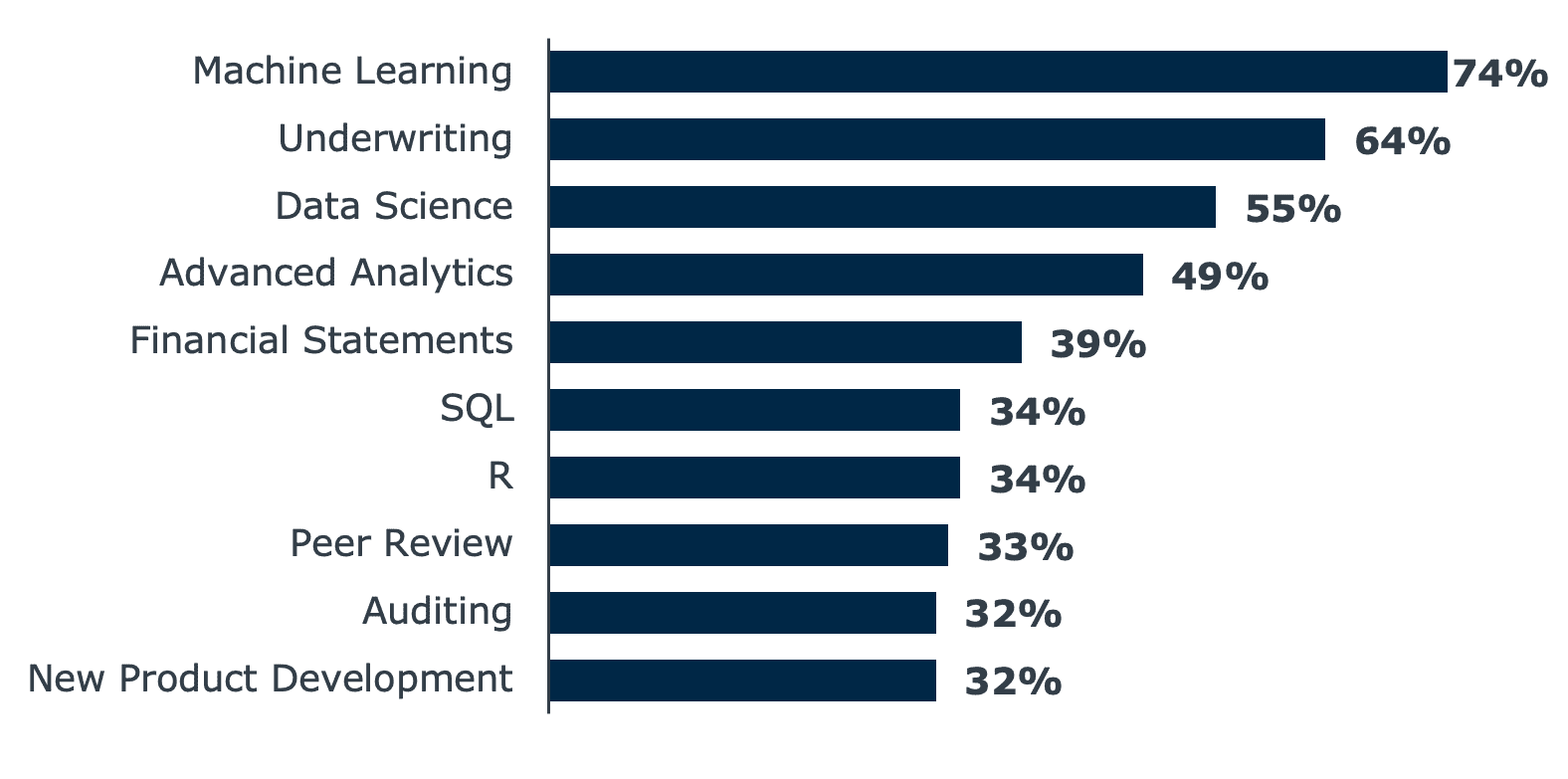

Skills for Actuaries with Machine Learning (ML) Skills*: percentage of roles requiring skill

In their place are brand new skills—data science, advanced analytics, advanced programming languages like R, and peer review—that point to a transformation of the job itself for actuaries with ML skills.

*©2024 Burning Glass Institute

The first chart illustrates the skills employers seek from all actuaries. The second chart highlights the skills sought from actuaries with machine learning skills. The first chart may suggest that actuarial positions have not evolved in response to AI-driven responsibilities. The second chart reveals a different picture: machine learning-focused actuary roles have changed so much that there is little overlap in the skills required between the two.

Ultimately, Sigelman advised presidents that preparing students for AI should include considering which timely skills learners need. But this is also a moment for institutions to double down on a liberal arts identity. Relevance requires not just embracing timely skills but also taking an expansive view of timeless skills to include things like data analysis, coding, and project management.

-

Next steps

Communicate to stakeholders that embracing generative AI doesn’t mean stepping away from a liberal arts identity. Encourage faculty leaders to revisit what constitutes both timely and timeless skills in their respective disciplines and prepare students to articulate these skills and their value when entering the workforce.

5. AI risks are real, but most early-stage pilots (e.g., chatbots) pose minimal risk because they typically involve limited amounts of data

Shuman Ghosemajumder, CEO of the AI and cybersecurity firm Reken, gave many examples of where generative AI tools like ChatGPT can get things wrong, such as making up plausible-sounding publications in a worker's CV. He also described how AI detection tools like GPTZero can give users a false sense of security about their ability to distinguish human-written from AI-generated content. For instance, he showed that the abstract from a recent Nature study received a GPTZero score indicating a 100% chance of being AI-generated, even though a detector's confident score is no guarantee that the verdict is correct.

More relevant for higher education leaders considering whether and how to embrace AI is the reality that most early-stage pilots involve limited data and therefore limited risk. Use cases like chat-based platforms and assessment tools pose less exposure risk because they tend to involve less (and less sensitive) data. For instance:

-

Teaching and Learning

Use Case: KEATH.ai, developed by University of Surrey researchers, is a personal AI assessment assistant designed to grade essays and provide detailed, real-time feedback across many fields and languages.

Impact: KEATH.ai grades essays ranging from 1,000 to 15,000 words, with a claimed baseline accuracy of 85%. With ongoing training and optimization, accuracy is expected to reach up to 95%.

Risk: Limited; the tool was trained on previously graded assignments.

-

Student Support Services

Use Case: The University of Galway created Cara, an AI-powered student engagement platform that provides 24/7 support by answering students' common questions on topics ranging from bike parking and classroom locations to mental health resources.

Impact: As of October 2023, Cara had answered more than 30,000 queries, resolving 91% of them without routing them to a staff member at Galway.

Risk: Limited; Cara leverages information already gathered by student services to free up human support staff capacity.

For more AI case studies, check out the Compendium of AI Applications in Higher Education.

Still, universities must ensure that homegrown AI efforts and applications are safe and secure. This requires coordination with the CIO’s office and adhering to existing policies and processes around cybersecurity, vendor relationships, and governance. And while presidents may see the need to increase investment in cybersecurity and AI infrastructure to enhance security, they can also exert more “soft power” to enforce existing policies and processes, such as requiring annual cybersecurity training.

-

Next steps

Connect with IT and cybersecurity leadership to ensure the institution’s data is protected and that those teams have the resources they need to build out appropriate AI infrastructure.
